Analysis of Experiment 1

Last updated: 2021-12-10

Checks: 7 passed, 0 failed

Knit directory: social_immunity/

This reproducible R Markdown analysis was created with workflowr (version 1.6.2). The Checks tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

Great! Since the R Markdown file has been committed to the Git repository, you know the exact version of the code that produced these results.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproducibility it’s best to always run the code in an empty environment.

The command set.seed(20191017) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great job! Using relative paths to the files within your workflowr project makes it easier to run your code on other machines.

These are the previous versions of the repository in which changes were made to the R Markdown (analysis/experiment1.Rmd) and HTML (docs/experiment1.html) files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view the files as they were in that past version.

| File | Version | Author | Date | Message |
|---|---|---|---|---|
| Rmd | 2df98e2 | lukeholman | 2021-12-10 | wflow_publish("analysis/experiment1.Rmd") |
| html | 9ab1b50 | lukeholman | 2021-12-10 | Build site. |
| Rmd | 3c83f34 | lukeholman | 2021-12-10 | wflow_publish("analysis/experiment1.Rmd") |
| html | b50daba | lukeholman | 2021-04-28 | Build site. |
| Rmd | 335743d | lukeholman | 2021-04-28 | wflow_publish("analysis/experiment1.Rmd") |
| html | fbfc820 | lukeholman | 2021-04-28 | Build site. |
| Rmd | be8266d | lukeholman | 2021-04-28 | wflow_publish("analysis/*") |
| html | af7a3ea | lukeholman | 2021-04-27 | Build site. |
| html | 3e419d1 | lukeholman | 2021-04-27 | Build site. |
| Rmd | 43d5c2f | lukeholman | 2021-04-27 | Behav Ecol version |
| html | 5bd1725 | lukeholman | 2021-01-12 | Build site. |
| Rmd | 62a950c | lukeholman | 2021-01-12 | mostly ready |
| html | 9ebe5df | lukeholman | 2021-01-12 | Build site. |
| Rmd | 9c79d22 | lukeholman | 2021-01-12 | mostly ready |
| html | a9799c8 | lukeholman | 2021-01-11 | Build site. |
| Rmd | 36cb008 | lukeholman | 2021-01-11 | wflow_publish("analysis/experiment1.Rmd", republish = TRUE) |
| html | 939ecd0 | lukeholman | 2021-01-11 | Build site. |
| Rmd | 78386bb | lukeholman | 2021-01-11 | tweaks 2021 |
| html | eeb5a09 | lukeholman | 2020-11-30 | Build site. |
| Rmd | 7aa69df | lukeholman | 2020-11-30 | Added simple models |
| html | e96fff4 | lukeholman | 2020-08-21 | Build site. |
| Rmd | 6fbe4a8 | lukeholman | 2020-08-21 | Resize figure |
| html | 7131f65 | lukeholman | 2020-08-21 | Build site. |
| Rmd | c80c978 | lukeholman | 2020-08-21 | Fix summarise() warnings |
| html | 4f23e70 | lukeholman | 2020-08-21 | Build site. |
| Rmd | c5c8df4 | lukeholman | 2020-08-21 | Minor fixes |
| html | 1bea769 | lukeholman | 2020-08-21 | Build site. |
| Rmd | d1dade3 | lukeholman | 2020-08-21 | added supp material |
| html | 6ee79e9 | lukeholman | 2020-08-21 | Build site. |
| Rmd | 7ec6dde | lukeholman | 2020-08-21 | remake before submission |
| html | 7bf607f | lukeholman | 2020-05-02 | Build site. |
| Rmd | 83fa522 | lukeholman | 2020-05-02 | tweaks |
| html | 3df58c2 | lukeholman | 2020-05-02 | Build site. |
| html | 2994a41 | lukeholman | 2020-05-02 | Build site. |
| Rmd | 9124fb8 | lukeholman | 2020-05-02 | added Git button |
| html | d166566 | lukeholman | 2020-05-02 | Build site. |
| html | fedef8f | lukeholman | 2020-05-02 | Build site. |
| html | 4cb9bc1 | lukeholman | 2020-05-02 | Build site. |
| Rmd | 14377be | lukeholman | 2020-05-02 | tweak colours |
| html | 14377be | lukeholman | 2020-05-02 | tweak colours |
| html | fa8c179 | lukeholman | 2020-05-02 | Build site. |
| Rmd | f188968 | lukeholman | 2020-05-02 | tweak colours |
| html | 2227713 | lukeholman | 2020-05-02 | Build site. |
| Rmd | f97baee | lukeholman | 2020-05-02 | Lots of formatting changes |
| html | f97baee | lukeholman | 2020-05-02 | Lots of formatting changes |
| html | 1c9a1c3 | lukeholman | 2020-05-02 | Build site. |
| Rmd | 3d21d6a | lukeholman | 2020-05-02 | wflow_publish("*", republish = T) |
| html | 3d21d6a | lukeholman | 2020-05-02 | wflow_publish("*", republish = T) |
| html | 93c487a | lukeholman | 2020-04-30 | Build site. |
| html | 5c45197 | lukeholman | 2020-04-30 | Build site. |
| html | 4bd75dc | lukeholman | 2020-04-30 | Build site. |
| Rmd | 12953af | lukeholman | 2020-04-30 | test new theme |
| html | d6437a5 | lukeholman | 2020-04-25 | Build site. |
| html | e58e720 | lukeholman | 2020-04-25 | Build site. |
| html | 2235ae4 | lukeholman | 2020-04-25 | Build site. |
| Rmd | 99649a7 | lukeholman | 2020-04-25 | tweaks |
| html | 99649a7 | lukeholman | 2020-04-25 | tweaks |
| html | 4b72d58 | lukeholman | 2020-04-24 | Build site. |
| Rmd | 67c3145 | lukeholman | 2020-04-24 | tweaks |
| html | 0ede6e3 | lukeholman | 2020-04-24 | Build site. |
| Rmd | a1f8dc2 | lukeholman | 2020-04-24 | tweaks |
| html | a1f8dc2 | lukeholman | 2020-04-24 | tweaks |
| html | 8c3b471 | lukeholman | 2020-04-21 | Build site. |
| Rmd | 1ce9e19 | lukeholman | 2020-04-21 | First commit 2020 |
| html | 1ce9e19 | lukeholman | 2020-04-21 | First commit 2020 |
| Rmd | aae65cf | lukeholman | 2019-10-17 | First commit |
| html | aae65cf | lukeholman | 2019-10-17 | First commit |

Load data and R packages

# All but 1 of these packages can be easily installed from CRAN.

# However it was harder to install the showtext package. On Mac, I did this:

# installed 'homebrew' using Terminal: ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

# installed 'libpng' using Terminal: brew install libpng

# installed 'showtext' in R using: devtools::install_github("yixuan/showtext")

library(showtext)

library(brms)

library(lme4)

library(bayesplot)

library(tidyverse)

library(gridExtra)

library(kableExtra)

library(bayestestR)

library(tidybayes)

library(cowplot)

library(car)

source("code/helper_functions.R")

# set up nice font for figure

nice_font <- "Lora"

font_add_google(name = nice_font, family = nice_font, regular.wt = 400, bold.wt = 700)

showtext_auto()

exp1_treatments <- c("Intact control", "Ringers", "LPS")

durations <- read_csv("data/data_collection_sheets/experiment_durations.csv") %>%

filter(experiment == 1) %>% select(-experiment)

durations <- bind_rows(durations, tibble(hive = "SkyLab", observation_time_minutes = 90))

# Note that we merge the heat-treated LPS data with the LPS data here.

# This change was made because the response variables did not differ between the two LPS treatments (heated and standard LPS), and because all evidence suggests that heat treatment does not do anything to the LPS; see the earlier version of this paper on bioRxiv for complete results without merging these treatments and discussion of why we included two LPS treatments (short version: immunologists often have heat-treated LPS as a control, even though this does not make any sense because LPS is notoriously heat-stable, and an immunologist colleague told us to include this control before we knew about this).

# The original manuscript was rejected by 2 journals, in part because the reviewers were baffled by the inclusion of two different LPS treatments, and so we merged the LPS treatments for simplicity in this version. The results are not changed in any way (they're just simpler as there are now 3 treatments instead of 4 in experiment 1) - see the bioRxiv version for comparison.

outcome_tally <- read_csv(file = "data/clean_data/experiment_1_outcome_tally.csv") %>%

mutate(treatment = replace(treatment, treatment == "Heat-treated LPS", "LPS")) %>% # merge the 2 LPS treatments

group_by(hive, treatment, outcome) %>%

summarise(n = sum(n), .groups = "drop") %>%

mutate(outcome = replace(outcome, outcome == "Left of own volition", "Left voluntarily")) %>%

mutate(outcome = factor(outcome, levels = c("Stayed inside the hive", "Left voluntarily", "Forced out")),

treatment = factor(treatment, levels = exp1_treatments)) %>%

arrange(hive, treatment, outcome)

# Re-formatted version of the same data, where each row is an individual bee. We need this format to run the brms model.

data_for_categorical_model <- outcome_tally %>%

mutate(id = 1:n()) %>%

split(.$id) %>%

map(function(x){

if(x$n[1] == 0) return(NULL)

data.frame(

treatment = x$treatment[1],

hive = x$hive[1],

outcome = rep(x$outcome[1], x$n))

}) %>% do.call("rbind", .) %>% as_tibble() %>%

arrange(hive, treatment) %>%

mutate(outcome_numeric = as.numeric(outcome),

hive = as.character(hive),

treatment = factor(treatment, levels = exp1_treatments)) %>%

left_join(durations, by = "hive")

Inspect the raw data

Click the three tabs to see each table.

Sample sizes by treatment

sample_sizes <- data_for_categorical_model %>%

group_by(treatment) %>%

summarise(n = n(), .groups = "drop")

sample_sizes %>%

kable() %>% kable_styling(full_width = FALSE)

| treatment | n |
|---|---|
| Intact control | 321 |
| Ringers | 153 |
| LPS | 368 |

Sample sizes by treatment and hive

data_for_categorical_model %>%

group_by(hive, treatment) %>%

summarise(n = n(), .groups = "drop") %>%

spread(treatment, n) %>%

kable() %>% kable_styling(full_width = FALSE)

| hive | Intact control | Ringers | LPS |
|---|---|---|---|
| Garden | 77 | 41 | 71 |
| SkyLab | 105 | NA | 101 |
| Zoology | 106 | 80 | 134 |
| Zoology_2 | 33 | 32 | 62 |

Observed outcomes

outcome_tally %>%

spread(outcome, n) %>%

kable(digits = 3) %>% kable_styling(full_width = FALSE)

| hive | treatment | Stayed inside the hive | Left voluntarily | Forced out |
|---|---|---|---|---|
| Garden | Intact control | 75 | 0 | 2 |
| Garden | Ringers | 41 | 0 | 0 |
| Garden | LPS | 71 | 0 | 0 |
| SkyLab | Intact control | 102 | 3 | 0 |
| SkyLab | LPS | 82 | 12 | 7 |
| Zoology | Intact control | 105 | 0 | 1 |
| Zoology | Ringers | 74 | 1 | 5 |
| Zoology | LPS | 119 | 3 | 12 |
| Zoology_2 | Intact control | 24 | 1 | 8 |
| Zoology_2 | Ringers | 23 | 1 | 8 |
| Zoology_2 | LPS | 37 | 3 | 22 |
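As a quick cross-check of the table above, the following base R sketch pools the observed counts over hives and computes the percentage of bees that exited the hive (left voluntarily or forced out) in each treatment. The counts are copied from the table; the object and column names (`tally`, `pooled`, `pct_exited`) are illustrative and do not appear in the original analysis.

```r
# Observed counts copied from the table above (one row per hive x treatment).
# Object and column names are illustrative, not taken from the original code.
tally <- data.frame(
  hive = c("Garden", "Garden", "Garden", "SkyLab", "SkyLab",
           "Zoology", "Zoology", "Zoology",
           "Zoology_2", "Zoology_2", "Zoology_2"),
  treatment = c("Intact control", "Ringers", "LPS", "Intact control", "LPS",
                "Intact control", "Ringers", "LPS",
                "Intact control", "Ringers", "LPS"),
  stayed = c(75, 41, 71, 102, 82, 105, 74, 119, 24, 23, 37),
  left_voluntarily = c(0, 0, 0, 3, 12, 0, 1, 3, 1, 1, 3),
  forced_out = c(2, 0, 0, 0, 7, 1, 5, 12, 8, 8, 22)
)

# Sum the three outcome columns within each treatment
pooled <- aggregate(cbind(stayed, left_voluntarily, forced_out) ~ treatment,
                    data = tally, FUN = sum)

# Total bees per treatment and the percentage that exited the hive
pooled$total <- with(pooled, stayed + left_voluntarily + forced_out)
pooled$pct_exited <- with(pooled, 100 * (left_voluntarily + forced_out) / total)

pooled
```

The pooled totals should match the sample sizes reported earlier (321, 153 and 368 bees). Pooling ignores the variation among hives, which the models in the following sections account for.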

Preliminary GLMM

The multinomial model below is not commonly used, though we believe it is the right choice for this particular experiment (e.g. because it can model a three-item categorical response variable, and it can incorporate priors). However during peer review, we were asked whether the results were similar when using standard statistical methods. To address this question, we here present a frequentist Generalised Linear Mixed Model (GLMM; using lme4::glmer), which tests the null hypothesis that the proportion of bees exiting the hive (i.e. the proportion leaving voluntarily plus those that were forced out) is equal between treatment groups and hives.

The model’s qualitative results are similar to those from the multinomial model: bees treated with LPS left the hive more often than intact controls (and there was a non-significant trend for more LPS bees to leave than Ringers bees), and there was variation between hives in the proportion of bees leaving.

Parameter estimates from the GLMM

With Intact control as the reference level

Note that the intact and Ringers controls do not differ significantly, whereas the LPS treatment differs significantly from the intact control.

glmm_data <- outcome_tally %>%

group_by(hive, treatment, outcome) %>%

summarise(n = sum(n)) %>%

mutate(left = ifelse(outcome == "Stayed inside the hive", "stayed_inside" ,"left_hive")) %>%

group_by(hive, treatment, left) %>%

summarise(n = sum(n)) %>%

spread(left, n)

simple_model <- glmer(

cbind(left_hive, stayed_inside) ~ treatment + (1 | hive),

data = glmm_data,

family = "binomial")

summary(simple_model)

Generalized linear mixed model fit by maximum likelihood (Laplace Approximation) ['glmerMod']

Family: binomial ( logit )

Formula: cbind(left_hive, stayed_inside) ~ treatment + (1 | hive)

Data: glmm_data

AIC BIC logLik deviance df.resid

70.0 71.6 -31.0 62.0 7

Scaled residuals:

Min 1Q Median 3Q Max

-1.3801 -0.9123 -0.1932 0.6095 1.9146

Random effects:

Groups Name Variance Std.Dev.

hive (Intercept) 1.622 1.273

Number of obs: 11, groups: hive, 4

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -3.2470 0.7038 -4.614 3.95e-06 ***

treatmentRingers 0.7016 0.4086 1.717 0.0859 .

treatmentLPS 1.2881 0.3095 4.162 3.16e-05 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) trtmnR

trtmntRngrs -0.260

treatmntLPS -0.335 0.586

With Ringers as the reference level

As before, the intact and Ringers controls do not differ significantly. The Ringers and LPS treatments also do not differ significantly.

simple_model <- glmer(

cbind(left_hive, stayed_inside) ~ treatment + (1 | hive),

data = glmm_data %>%

mutate(treatment = relevel(treatment, ref = "Ringers")),

family = "binomial")

summary(simple_model)

Generalized linear mixed model fit by maximum likelihood (Laplace Approximation) ['glmerMod']

Family: binomial ( logit )

Formula: cbind(left_hive, stayed_inside) ~ treatment + (1 | hive)

Data: glmm_data %>% mutate(treatment = relevel(treatment, ref = "Ringers"))

AIC BIC logLik deviance df.resid

70.0 71.6 -31.0 62.0 7

Scaled residuals:

Min 1Q Median 3Q Max

-1.3801 -0.9123 -0.1932 0.6095 1.9146

Random effects:

Groups Name Variance Std.Dev.

hive (Intercept) 1.622 1.273

Number of obs: 11, groups: hive, 4

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -2.5454 0.7158 -3.556 0.000377 ***

treatmentIntact control -0.7016 0.4086 -1.717 0.085946 .

treatmentLPS 0.5865 0.3383 1.733 0.083022 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) trtmIc

trtmntIntcc -0.315

treatmntLPS -0.376 0.671

Inspect Type II Anova table

Anova(simple_model, test = "Chisq", type = "II")

Analysis of Deviance Table (Type II Wald chisquare tests)

Response: cbind(left_hive, stayed_inside)

Chisq Df Pr(>Chisq)

treatment 18.115 2 0.0001165 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Bayesian multinomial model

Fit the model

Fit the multinomial logistic model, with a three-level response variable describing what happened to each bee introduced to the hive: stayed inside, left voluntarily, or forced out by the other workers.

if(!file.exists("output/exp1_model.rds")){

prior <- c(set_prior("normal(0, 3)", class = "b", dpar = "mu2"),

set_prior("normal(0, 3)", class = "b", dpar = "mu3"),

set_prior("normal(0, 1)", class = "sd", dpar = "mu2", group = "hive"),

set_prior("normal(0, 1)", class = "sd", dpar = "mu3", group = "hive"))

exp1_model <- brm(

outcome_numeric ~ treatment + (1 | hive),

data = data_for_categorical_model,

prior = prior,

family = "categorical",

chains = 4, cores = 1, iter = 5000, seed = 1)

saveRDS(exp1_model, "output/exp1_model.rds")

}

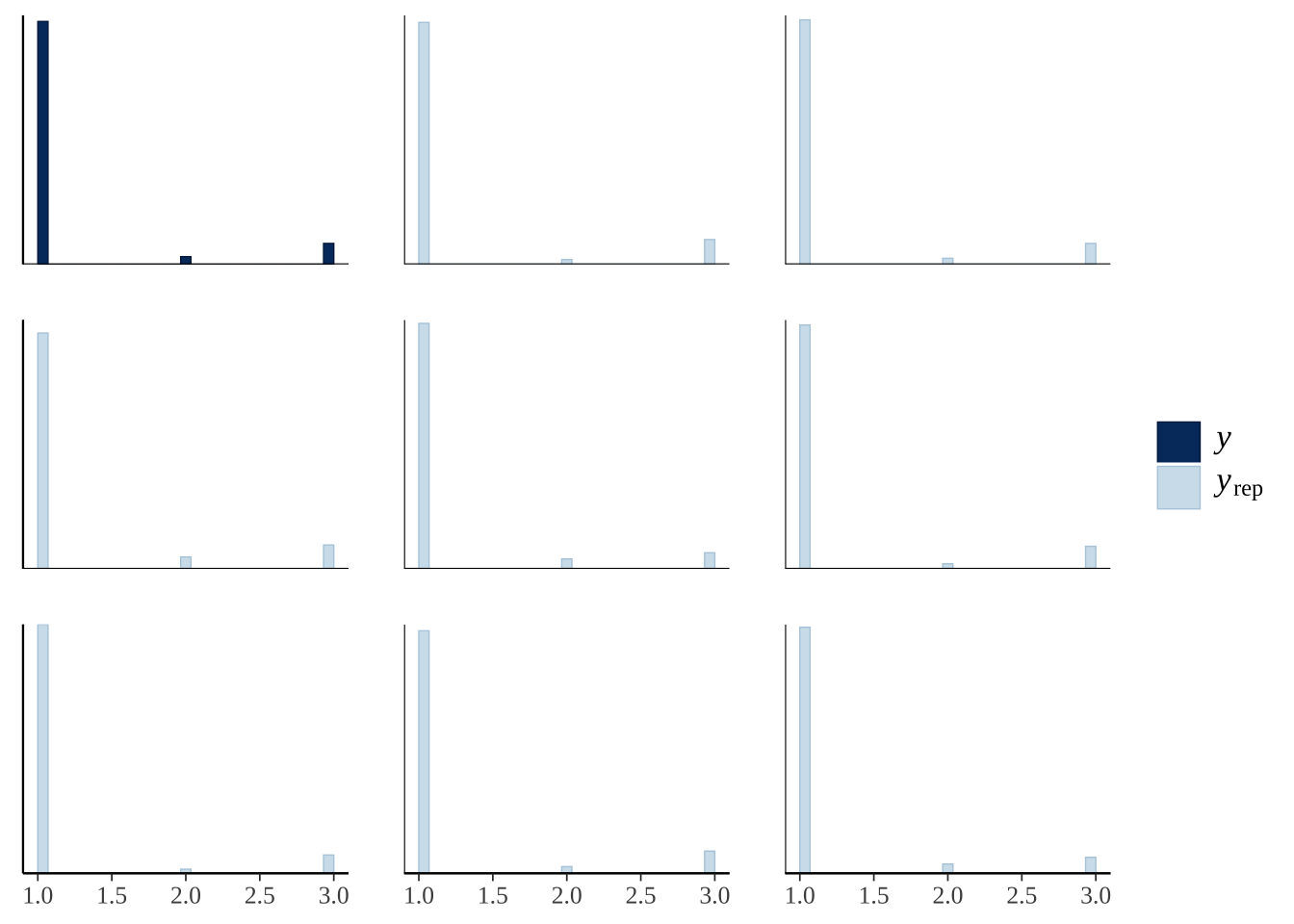

exp1_model <- readRDS("output/exp1_model.rds")

Posterior predictive check

This plot shows eight predictions from the posterior (pale blue) as well as the original data (dark blue), for the three categorical outcomes (1: stayed inside, 2: left voluntarily, 3: forced out). The predicted number of bees in each outcome category is similar to the real data, illustrating that the model is able to recapitulate the original data fairly closely (a necessary requirement for making inferences from the model).

pp_check(exp1_model, type = "hist", nsamples = 8)
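To illustrate the logic of a posterior predictive check without refitting the brms model, here is a minimal base R sketch that uses a much simpler stand-in model: a single multinomial for all bees with a flat Dirichlet prior, which has a closed-form Dirichlet posterior. This is not the hierarchical model fitted above. The pooled counts are summed from the observed outcomes table, and the names `observed`, `draw_dirichlet` and `replicates` are illustrative.

```r
set.seed(1)

# Observed counts pooled over hives and treatments (summed from the raw data table)
observed <- c(stayed = 753, left_voluntarily = 24, forced_out = 65)
n_bees <- sum(observed)
n_rep <- 8  # number of replicate datasets, mirroring nsamples = 8

# One draw from a Dirichlet distribution, obtained by normalising gamma draws
draw_dirichlet <- function(alpha) {
  g <- rgamma(length(alpha), shape = alpha, rate = 1)
  g / sum(g)
}

# For each replicate: draw outcome probabilities from the posterior
# (Dirichlet(1 + counts) under a flat prior), then simulate a new dataset
replicates <- t(sapply(seq_len(n_rep), function(i) {
  p <- draw_dirichlet(observed + 1)
  rmultinom(1, size = n_bees, prob = p)[, 1]
}))
colnames(replicates) <- names(observed)

# Compare the observed counts (first row) with the simulated replicates
rbind(observed, replicates)
```

If the simulated counts scatter around the observed counts, the model can reproduce the data. This is the same comparison that the histogram from pp_check() presents graphically for the fitted model.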

Parameter estimates from the model

Output of the Bayesian multinomial logistic model

summary(exp1_model)

Family: categorical

Links: mu2 = logit; mu3 = logit

Formula: outcome_numeric ~ treatment + (1 | hive)

Data: data_for_categorical_model (Number of observations: 842)

Samples: 4 chains, each with iter = 5000; warmup = 2500; thin = 1;

total post-warmup samples = 10000

Group-Level Effects:

~hive (Number of levels: 4)

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

sd(mu2_Intercept) 1.34 0.51 0.55 2.51 1.00 6513 6060

sd(mu3_Intercept) 1.39 0.43 0.74 2.38 1.00 5443 6887

Population-Level Effects:

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

mu2_Intercept -4.94 0.93 -6.97 -3.30 1.00 4805 5207

mu3_Intercept -3.59 0.81 -5.24 -2.00 1.00 3995 5108

mu2_treatmentRingers 0.59 0.97 -1.43 2.38 1.00 9475 6896

mu2_treatmentLPS 1.57 0.56 0.55 2.73 1.00 11154 7439

mu3_treatmentRingers 0.60 0.46 -0.31 1.50 1.00 8390 7922

mu3_treatmentLPS 1.16 0.37 0.46 1.93 1.00 7964 7060

Samples were drawn using sampling(NUTS). For each parameter, Bulk_ESS

and Tail_ESS are effective sample size measures, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).

Formatted brms output for Table S1

The code chunk below wrangles the output of the summary() function for brms models into a more readable table of results, and also adds ‘Bayesian p-values’ (i.e. the posterior probability that the true effect size has the opposite sign to the reported effect).

Table S1: Table summarising the posterior estimates of each fixed effect in the Bayesian multinomial logistic model of Experiment 1. Because there were three possible outcomes for each bee (Stayed inside, Left voluntarily, or Forced out), there are two parameter estimates for each predictor in the model. ‘Treatment’ is a fixed factor with three levels, and the effects shown here are expressed relative to the ‘Intact control’ group. The \(PP\) column gives the posterior probability that the true effect size is opposite in sign to what is reported in the Estimate column, similarly to a \(p\)-value.

tableS1 <- get_fixed_effects_with_p_values(exp1_model) %>%

mutate(mu = map_chr(str_extract_all(Parameter, "mu[:digit:]"), ~ .x[1]),

Parameter = str_remove_all(Parameter, "mu[:digit:]_"),

Parameter = str_replace_all(Parameter, "treatment", "Treatment: ")) %>%

arrange(mu) %>%

select(-mu, -Rhat, -Bulk_ESS, -Tail_ESS) %>%

mutate(PP = format(round(PP, 4), nsmall = 4))

names(tableS1)[3:5] <- c("Est. Error", "Lower 95% CI", "Upper 95% CI")

saveRDS(tableS1, file = "figures/tableS1.rds")

tableS1 %>%

kable(digits = 3) %>%

kable_styling(full_width = FALSE) %>%

pack_rows("% bees leaving voluntarily", 1, 3) %>%

pack_rows("% bees forced out", 4, 6)

| Parameter | Estimate | Est. Error | Lower 95% CI | Upper 95% CI | PP | |
|---|---|---|---|---|---|---|
| % bees leaving voluntarily | | | | | | |
| Intercept | -4.937 | 0.929 | -6.969 | -3.300 | 0.0000 | *** |
| Treatment: Ringers | 0.590 | 0.968 | -1.426 | 2.375 | 0.2570 | |
| Treatment: LPS | 1.571 | 0.555 | 0.555 | 2.731 | 0.0007 | *** |
| % bees forced out | | | | | | |
| Intercept | -3.586 | 0.812 | -5.244 | -1.999 | 0.0000 | *** |
| Treatment: Ringers | 0.596 | 0.458 | -0.310 | 1.497 | 0.0977 | ~ |
| Treatment: LPS | 1.164 | 0.369 | 0.462 | 1.929 | 0.0001 | *** |
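The estimates in Table S1 are on the scale of the linear predictors mu2 (left voluntarily) and mu3 (forced out), with "stayed inside" as the reference category. As a rough illustration of how these map onto outcome probabilities, the sketch below applies the multinomial-logit (softmax) transformation to the posterior mean estimates. It assumes a hive whose random intercepts are zero, and it plugs in posterior means rather than transforming each posterior draw, so the results only approximate the posterior predictions derived in the next section. The function name `softmax_ref` is hypothetical.

```r
# Convert the two linear predictors of a 3-category multinomial logit model
# into probabilities; category 1 (stayed inside) is the reference, with mu1 = 0.
# softmax_ref is an illustrative helper, not part of the original analysis.
softmax_ref <- function(mu2, mu3) {
  denom <- 1 + exp(mu2) + exp(mu3)
  c(stayed_inside    = 1 / denom,
    left_voluntarily = exp(mu2) / denom,
    forced_out       = exp(mu3) / denom)
}

# Posterior mean estimates taken from Table S1
intercept_mu2 <- -4.937
intercept_mu3 <- -3.586
lps_mu2 <- 1.571
lps_mu3 <- 1.164

# Approximate outcome probabilities, assuming hive random intercepts of zero
intact <- softmax_ref(intercept_mu2, intercept_mu3)
lps    <- softmax_ref(intercept_mu2 + lps_mu2, intercept_mu3 + lps_mu3)

# Express as percentages for comparison between the two treatments
round(100 * rbind(`Intact control` = intact, LPS = lps), 1)
```

Because both LPS coefficients are positive, the probabilities of leaving voluntarily and of being forced out are higher in the LPS row than in the intact control row, and the probability of staying inside is lower.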

Plotting estimates from the model

Derive prediction from the posterior

get_posterior_preds <- function(focal_hive){

new <- expand.grid(

treatment = levels(data_for_categorical_model$treatment),

hive = focal_hive)

preds <- fitted(exp1_model, newdata = new, summary = FALSE)

dimnames(preds) <- list(NULL, new[,1], NULL)

rbind(

as.data.frame(preds[,, 1]) %>%

mutate(outcome = "Stayed inside the hive", posterior_sample = 1:n()),

as.data.frame(preds[,, 2]) %>%

mutate(outcome = "Left voluntarily", posterior_sample = 1:n()),

as.data.frame(preds[,, 3]) %>%

mutate(outcome = "Forced out", posterior_sample = 1:n())) %>%

gather(treatment, prop, `Intact control`, Ringers, LPS) %>%

mutate(outcome = factor(outcome,

c("Stayed inside the hive", "Left voluntarily", "Forced out")),

treatment = factor(treatment,

c("Intact control", "Ringers", "LPS"))) %>%

as_tibble() %>% arrange(treatment, outcome)

}

# plotting data for panel A: one specific hive

plotting_data <- get_posterior_preds(focal_hive = "Zoology")

# stats data: for panel B and the table of stats

stats_data <- get_posterior_preds(focal_hive = NA)

Make Figure 1

cols <- RColorBrewer::brewer.pal(3, "Set2")

# Make panel A, showing the raw data:

all_hives <- outcome_tally %>%

group_by(treatment, outcome) %>%

summarise(n = sum(n), .groups = "drop") %>%

ungroup() %>% mutate(hive = "All hives")

pd <- position_dodge(.3)

raw_plot <- outcome_tally %>%

group_by(treatment, outcome) %>%

summarise(n = sum(n), .groups = "drop") %>% mutate() %>%

group_by(treatment) %>%

mutate(total_n = sum(n),

percent = 100 * n / sum(n),

SE = sqrt(total_n * (percent/100) * (1-(percent/100)))) %>%

ungroup() %>%

mutate(lowerCI = map_dbl(1:n(), ~ 100 * binom.test(n[.x], total_n[.x])$conf.int[1]),

upperCI = map_dbl(1:n(), ~ 100 * binom.test(n[.x], total_n[.x])$conf.int[2])) %>%

filter(outcome != "Stayed inside the hive") %>%

mutate(treatment = paste(treatment, "\n(n = ", total_n, ")", sep = ""),

treatment = factor(treatment, unique(treatment))) %>%

ggplot(aes(treatment, percent, fill = outcome)) +

geom_errorbar(aes(ymin=lowerCI, ymax=upperCI), position = pd, width = 0) +

geom_point(stat = "identity", position = pd, colour = "grey15", pch = 21, size = 4) +

scale_fill_manual(values = cols[2:3], name = "") +

xlab("Treatment") + ylab("% bees (\u00B1 95% CIs)") +

theme_bw() +

theme(text = element_text(family = nice_font)) +

coord_flip()

dot_plot <- plotting_data %>%

left_join(sample_sizes, by = "treatment") %>%

arrange(treatment) %>%

mutate(outcome = str_replace_all(outcome, "Stayed inside the hive", "Stayed inside"),

outcome = factor(outcome, c("Stayed inside", "Left voluntarily", "Forced out"))) %>%

ggplot(aes(100 * prop, treatment)) +

stat_dotsh(quantiles = 100, fill = "grey40", colour = "grey40") +

stat_pointintervalh(aes(colour = outcome, fill = outcome),

.width = c(0.5, 0.95),

position = position_nudge(y = -0.07),

point_colour = "grey26", pch = 21, stroke = 0.4) +

scale_colour_manual(values = cols) +

scale_fill_manual(values = cols) +

facet_wrap( ~ outcome, scales = "free_x") +

xlab("% bees (posterior estimate)") + ylab("Treatment") +

theme_bw() +

coord_cartesian(ylim=c(1.4, 3)) +

theme(

text = element_text(family = nice_font),

strip.background = element_rect(fill = "#eff0f1"),

panel.grid.major.y = element_blank(),

legend.position = "none"

)

# positive effect = odds of this outcome are higher for trt2 than trt1 (put control as trt1)

get_log_odds <- function(trt1, trt2){

log((trt2 / (1 - trt2) / (trt1 / (1 - trt1))))

}

LOR <- stats_data %>%

spread(treatment, prop) %>%

mutate(LOR_intact_Ringers = get_log_odds(`Intact control`, Ringers),

LOR_intact_LPS = get_log_odds(`Intact control`, LPS),

LOR_Ringers_LPS = get_log_odds(Ringers, LPS)) %>%

select(posterior_sample, outcome, starts_with("LOR")) %>%

gather(LOR, comparison, starts_with("LOR")) %>%

mutate(LOR = str_remove_all(LOR, "LOR_"),

LOR = str_replace_all(LOR, "Ringers_LPS", "LPS\n(vs Ringers)"),

LOR = str_replace_all(LOR, "intact_Ringers", "Ringers\n(vs Intact control)"),

LOR = str_replace_all(LOR, "intact_LPS", "LPS\n(vs Intact control)"))

levs <- LOR$LOR %>% unique() %>% sort()

LOR$LOR <- factor(LOR$LOR, rev(levs[c(3,5,4,2,1,6)]))

LOR_plot <- LOR %>%

mutate(outcome = str_replace_all(outcome, "Stayed inside the hive", "Stayed inside"),

outcome = factor(outcome, levels = rev(c("Forced out", "Left voluntarily", "Stayed inside")))) %>%

ggplot(aes(y = comparison, x = LOR, colour = outcome)) +

geom_hline(yintercept = 0, size = 0.3, colour = "grey20") +

geom_hline(yintercept = log(2), linetype = 2, size = 0.6, colour = "grey") +

geom_hline(yintercept = -log(2), linetype = 2, size = 0.6, colour = "grey") +

stat_pointinterval(aes(colour = outcome, fill = outcome),

.width = c(0.5, 0.95),

position = position_dodge(0.6),

point_colour = "grey26", pch = 21, stroke = 0.4) +

scale_colour_manual(values = cols) +

scale_fill_manual(values = cols) +

ylab("Effect size (log odds ratio)") +

xlab("Treatment (reference)") +

theme_bw() +

coord_flip() +

theme(

text = element_text(family = nice_font),

panel.grid.major.y = element_blank(),

legend.position = "none"

)

empty <- ggplot() + theme_void()

top <- cowplot::plot_grid(plotlist = list(empty, raw_plot, empty), nrow = 1,

labels = c("", "A", ""), rel_widths = c(0.021, 0.8,0.179))

bottom <- cowplot::plot_grid(

plotlist = list(dot_plot, LOR_plot),

labels = c("B", "C"),

nrow = 1, align = 'v', axis = 'l',

rel_heights = c(1.4, 1))

p <- cowplot::plot_grid(top, bottom, nrow = 2, align = 'hv', axis = 'l')

ggsave(plot = p, filename = "figures/fig1.pdf", height = 5.4, width = 9)

p

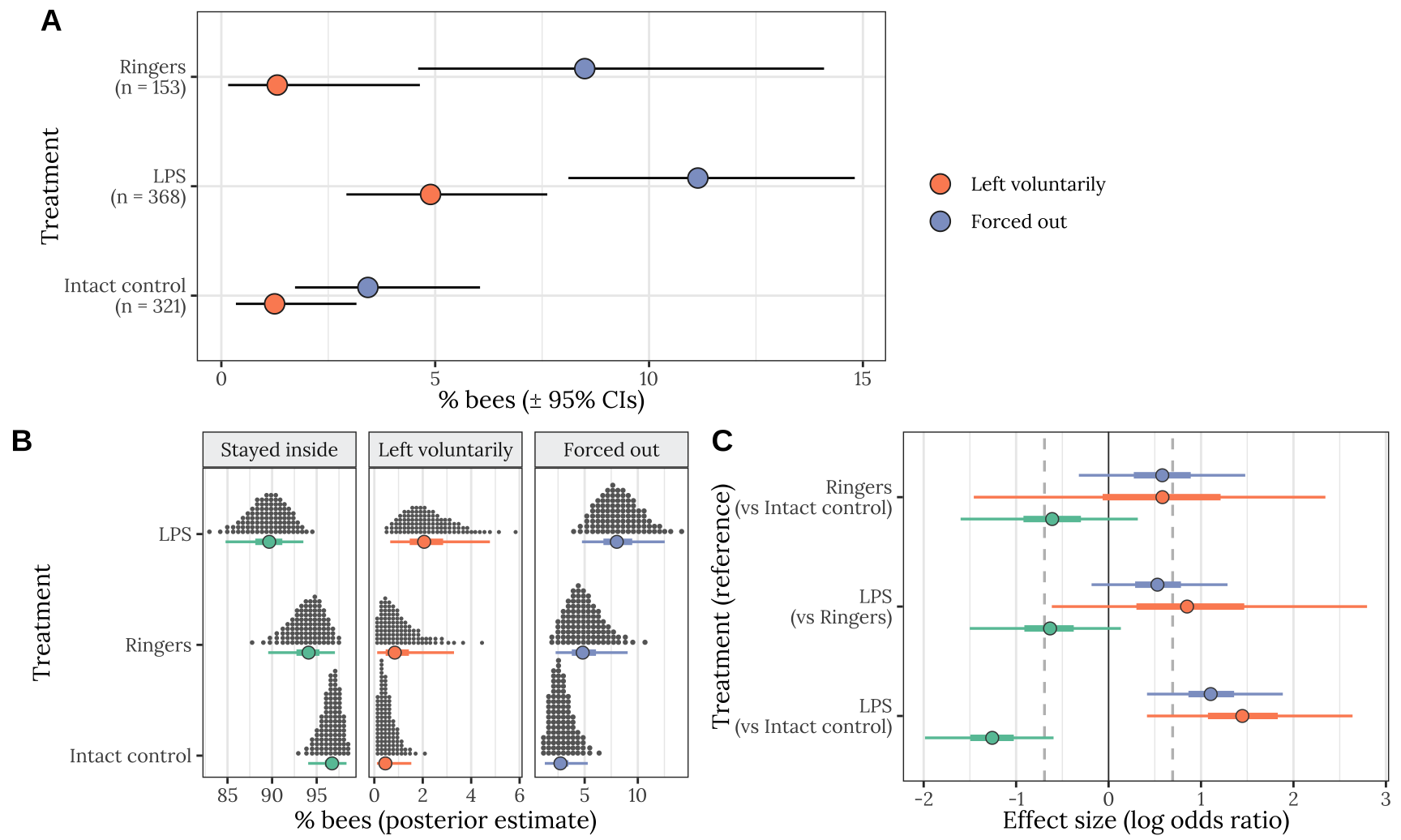

Figure 1: Results of Experiment 1 (n = 842 bees). Panel A shows summary statistics of the raw data, i.e. the percentage of bees leaving or being forced out of the hive (\(\pm\) 95% confidence intervals), while Panels B-C show estimates from the Bayesian MLM. Panel B shows the posterior estimate of % bees staying inside the hive (left), leaving voluntarily (middle), or being forced out (right), for each of the three treatments. The quantile dot plot shows 100 approximately equally likely estimates of the true % bees, and the horizontal bars show the median and the 50% and 95% credible intervals of the posterior distribution. Panel C gives the posterior estimates of the effect size of each treatment, relative to one of the other treatments (whose name appears in parentheses), expressed as a log odds ratio (LOR). Positive LOR indicates that the % bees showing this particular outcome is higher in the treatment than the control; for example, more bees left voluntarily (orange) or were forced out (blue) in the LPS treatment than in the intact control. The vertical lines mark \(LOR = 0\), indicating no effect, and \(LOR = \pm log(2)\), i.e. the point at which the odds are twice as high in one treatment as the other.
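The log odds ratio used in Panel C can be illustrated with a small worked example. The sketch below restates the get_log_odds() function from the plotting code and applies it to two made-up proportions (hypothetical values, not estimates from the model), chosen so that the odds in the treatment are exactly twice those in the control.

```r
# Same definition as in the plotting code: log of (odds in trt2 / odds in trt1)
get_log_odds <- function(trt1, trt2) {
  log((trt2 / (1 - trt2)) / (trt1 / (1 - trt1)))
}

# Hypothetical proportions of bees showing some outcome
control <- 0.10    # odds = 0.10 / 0.90 = 1/9
treated <- 2 / 11  # odds = (2/11) / (9/11) = 2/9, i.e. twice the control odds

lor <- get_log_odds(control, treated)

# The LOR equals log(2) when the odds double,
# and swapping the two groups flips its sign
all.equal(lor, log(2))
all.equal(get_log_odds(treated, control), -log(2))
```

Note that doubling the odds does not double the proportion: here the proportion rises from 10% to about 18%.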

Hypothesis testing and effect sizes

This section calculates the posterior difference in treatment group means, in order to perform some null hypothesis testing, calculate effect size (as a log odds ratio), and calculate the 95% credible intervals on the effect size.

The following code chunks perform planned contrasts between pairs of treatments that we consider important to the biological hypotheses under test. For example, the contrast between the LPS treatment and the Ringers treatment provides information about the effect of immune stimulation, while the Ringers - Intact Control contrast provides information about the effect of wounding in the absence of LPS.
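The general recipe for such a contrast can be sketched in base R. Given paired posterior draws of the proportion of bees showing an outcome in two treatments, one computes the difference and the log odds ratio draw by draw, then summarises the resulting distribution. The draws below are simulated from arbitrary Beta distributions purely as hypothetical stand-ins for the model's posterior, and all object names are illustrative.

```r
set.seed(1)
n_draws <- 4000

# Hypothetical stand-ins for posterior draws of a proportion in two treatments
# (arbitrary Beta distributions, NOT the posterior of the fitted model)
post_ringers <- rbeta(n_draws, 10, 90)
post_lps     <- rbeta(n_draws, 20, 80)

# Contrasts are computed draw by draw
diff_pct <- 100 * (post_lps - post_ringers)          # difference in % bees
lor      <- qlogis(post_lps) - qlogis(post_ringers)  # log odds ratio

# Posterior summary of the log odds ratio: mean and 95% credible interval
lor_summary <- c(Estimate = mean(lor), quantile(lor, c(0.025, 0.975)))

# Probability of direction: share of draws with the same sign as the majority.
# PP is its complement, i.e. the probability that the effect has the opposite sign.
p_direction <- max(mean(lor > 0), mean(lor < 0))
PP <- 1 - p_direction

lor_summary
PP
```

Here qlogis(p) is the log odds log(p / (1 - p)), so the difference of two qlogis() values is the same log odds ratio that get_log_odds() computes.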

Calculate contrasts: % bees staying inside the hive

# Helper function to summarise a posterior. PP is calculated as 1 - p_direction,

# i.e. the posterior probability that the effect size has the opposite sign to the estimate,

# which has a similar interpretation to a one-tailed p-value

my_summary <- function(df, columns) {

lapply(columns, function(x){

p <- 1 - (df %>% pull(!! x) %>%

bayestestR::p_direction() %>% as.numeric())

df %>% pull(!! x) %>% posterior_summary() %>% as_tibble() %>%

mutate(PP = p) %>% mutate(Metric = x) %>% select(Metric, everything()) %>%

mutate(` ` = ifelse(PP < 0.1, "~", ""),

` ` = replace(` `, PP < 0.05, "\\*"),

` ` = replace(` `, PP < 0.01, "**"),

` ` = replace(` `, PP < 0.001, "***"),

` ` = replace(` `, PP == " ", ""))

}) %>% do.call("rbind", .)

}

# Helper to make one unit of the big stats table

make_stats_table <- function(

dat, groupA, groupB, comparison, metric){

output <- dat %>%

spread(treatment, prop) %>%

mutate(

metric_here = 100 * (!! enquo(groupB) - !! enquo(groupA)),

`Log odds ratio` = get_log_odds(!! enquo(groupA), !! enquo(groupB))) %>%

my_summary(c("metric_here", "Log odds ratio")) %>%

mutate(PP = c(" ", format(round(PP[2], 4), nsmall = 4)),

` ` = c(" ", ` `[2]),

Comparison = comparison) %>%

select(Comparison, everything()) %>%

mutate(Metric = replace(Metric, Metric == "metric_here", metric))

names(output)[names(output) == "metric_here"] <- metric

output

}

stayed_inside_stats_table <- rbind(

stats_data %>%

filter(outcome == "Stayed inside the hive") %>%

make_stats_table(`Ringers`, `LPS`, "LPS (Ringers)",

metric = "Difference in % bees staying inside"),

stats_data %>%

filter(outcome == "Stayed inside the hive") %>%

make_stats_table(`Intact control`, `LPS`, "LPS (Intact control)",

metric = "Difference in % bees staying inside"),

stats_data %>%

filter(outcome == "Stayed inside the hive") %>%

make_stats_table(`Intact control`, `Ringers`, "Ringers (Intact control)",

metric = "Difference in % bees staying inside")

) %>% as_tibble()

stayed_inside_stats_table[c(2,4,6), 1] <- " "

Calculate contrasts: % bees that left voluntarily

voluntary_stats_table <- rbind(

stats_data %>%

filter(outcome == "Left voluntarily") %>%

make_stats_table(`Ringers`, `LPS`,

"LPS (Ringers)",

metric = "Difference in % bees leaving voluntarily"),

stats_data %>%

filter(outcome == "Left voluntarily") %>%

make_stats_table(`Intact control`, `LPS`,

"LPS (Intact control)",

metric = "Difference in % bees leaving voluntarily"),

stats_data %>%

filter(outcome == "Left voluntarily") %>%

make_stats_table(`Intact control`, `Ringers`,

"Ringers (Intact control)",

metric = "Difference in % bees leaving voluntarily")

) %>% as_tibble()

voluntary_stats_table[c(2,4,6), 1] <- " "

Calculate contrasts: % bees that were forced out

forced_out_stats_table <- rbind(

stats_data %>%

filter(outcome == "Forced out") %>%

make_stats_table(`Ringers`, `LPS`,

"LPS (Ringers)",

metric = "Difference in % bees forced out"),

stats_data %>%

filter(outcome == "Forced out") %>%

make_stats_table(`Intact control`, `LPS`,

"LPS (Intact control)",

metric = "Difference in % bees forced out"),

stats_data %>%

filter(outcome == "Forced out") %>%

make_stats_table(`Intact control`, `Ringers`, "Ringers (Intact control)",

metric = "Difference in % bees forced out")

) %>% as_tibble()

forced_out_stats_table[c(2,4,6), 1] <- " "

Present all contrasts in one table:

Table S2: This table gives statistics associated with each of the contrasts plotted in Figure 1C. Each pair of rows gives the absolute effect size (i.e. the difference in % bees) and standardised effect size (as log odds ratio; LOR) for the focal treatment, relative to the treatment shown in parentheses, for one of the three possible outcomes (Stayed inside, Left voluntarily, or Forced out). A LOR of \(|log(x)|\) indicates that the odds of the outcome are \(x\) times higher in one treatment than in the other, e.g. \(log(2) = 0.69\) and \(log(0.5) = -0.69\) correspond to a two-fold difference in odds. The \(PP\) column gives the posterior probability that the true effect size has the opposite sign to that shown in the Estimate column; this metric has a similar interpretation to a one-tailed \(p\) value.

tableS2 <- bind_rows(
  stayed_inside_stats_table,
  voluntary_stats_table,
  forced_out_stats_table)

saveRDS(tableS2, file = "figures/tableS2.rds")

tableS2 %>%
  kable(digits = 2) %>% kable_styling(full_width = FALSE) %>%
  row_spec(seq(2,18,by=2), extra_css = "border-bottom: solid;") %>%
  pack_rows("% bees staying inside", 1, 6) %>%
  pack_rows("% bees leaving voluntarily", 7, 12) %>%
  pack_rows("% bees forced out", 13, 18)

| Comparison | Metric | Estimate | Est.Error | Q2.5 | Q97.5 | PP | |
|---|---|---|---|---|---|---|---|
| % bees staying inside | | | | | | | |
| LPS (Ringers) | Difference in % bees staying inside | -6.51 | 6.31 | -22.60 | 1.36 | | |
| | Log odds ratio | -0.65 | 0.41 | -1.50 | 0.13 | 0.0499 | * |
| LPS (Intact control) | Difference in % bees staying inside | -11.22 | 8.65 | -31.20 | -1.02 | | |
| | Log odds ratio | -1.27 | 0.35 | -1.99 | -0.60 | 1e-04 | *** |
| Ringers (Intact control) | Difference in % bees staying inside | -4.70 | 5.88 | -20.79 | 1.56 | | |
| | Log odds ratio | -0.62 | 0.48 | -1.60 | 0.32 | 0.0888 | ~ |
| % bees leaving voluntarily | | | | | | | |
| LPS (Ringers) | Difference in % bees leaving voluntarily | 2.28 | 3.38 | -2.86 | 10.48 | | |
| | Log odds ratio | 0.92 | 0.88 | -0.61 | 2.80 | 0.1401 | |
| LPS (Intact control) | Difference in % bees leaving voluntarily | 3.55 | 3.44 | 0.09 | 12.29 | | |
| | Log odds ratio | 1.46 | 0.57 | 0.42 | 2.64 | 0.0030 | ** |
| Ringers (Intact control) | Difference in % bees leaving voluntarily | 1.27 | 2.91 | -1.94 | 9.76 | | |
| | Log odds ratio | 0.55 | 0.97 | -1.46 | 2.35 | 0.2711 | |
| % bees forced out | | | | | | | |
| LPS (Ringers) | Difference in % bees forced out | 4.23 | 5.59 | -1.18 | 20.53 | | |
| | Log odds ratio | 0.54 | 0.37 | -0.18 | 1.29 | 0.0736 | ~ |
| LPS (Intact control) | Difference in % bees forced out | 7.67 | 8.11 | 0.53 | 28.03 | | |
| | Log odds ratio | 1.12 | 0.37 | 0.42 | 1.88 | 4e-04 | *** |
| Ringers (Intact control) | Difference in % bees forced out | 1.27 | 2.91 | -1.94 | 9.76 | | |
| | Log odds ratio | 0.55 | 0.97 | -1.46 | 2.35 | 0.2711 | |
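Log odds ratios can be easier to read after exponentiating them. The sketch below back-transforms the three LPS (Intact control) log odds ratio rows copied from the table above. The data frame `lor_rows` and its `outcome` column are set up here purely for illustration, and exponentiating the Estimate column is only an approximation if that column holds a posterior mean.

```r
# Log odds ratio rows for LPS (Intact control), copied from Table S2.
lor_rows <- data.frame(
  outcome  = c("Stayed inside", "Left voluntarily", "Forced out"),
  Estimate = c(-1.27, 1.46, 1.12),
  Q2.5     = c(-1.99, 0.42, 0.42),
  Q97.5    = c(-0.60, 2.64, 1.88)
)

# Exponentiate to obtain odds ratios; values below 1 mean lower odds under LPS.
# Interval bounds carry over exactly because exp() is monotonic.
odds_ratios <- cbind(
  lor_rows["outcome"],
  round(exp(lor_rows[c("Estimate", "Q2.5", "Q97.5")]), 2)
)

odds_ratios
```

For example, the estimate of -1.27 for staying inside corresponds to an odds ratio of about 0.28, i.e. the odds of staying inside were roughly a quarter as high for LPS-treated bees as for intact controls.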

sessionInfo()

R version 4.0.3 (2020-10-10)

Platform: x86_64-apple-darwin17.0 (64-bit)

Running under: macOS Catalina 10.15.7

Matrix products: default

BLAS: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRblas.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/4.0/Resources/lib/libRlapack.dylib

locale:

[1] en_GB.UTF-8/en_GB.UTF-8/en_GB.UTF-8/C/en_GB.UTF-8/en_GB.UTF-8

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] car_3.0-8 carData_3.0-4 cowplot_1.0.0 tidybayes_2.0.3 bayestestR_0.11.5 kableExtra_1.3.4

[7] gridExtra_2.3 forcats_0.5.0 stringr_1.4.0 dplyr_1.0.0 purrr_0.3.4 readr_2.0.0

[13] tidyr_1.1.0 tibble_3.0.1 ggplot2_3.3.2 tidyverse_1.3.0 bayesplot_1.7.2 lme4_1.1-23

[19] Matrix_1.2-18 brms_2.14.4 Rcpp_1.0.4.6 showtext_0.9-2 showtextdb_3.0 sysfonts_0.8.3

[25] workflowr_1.6.2

loaded via a namespace (and not attached):

[1] readxl_1.3.1 backports_1.1.7 systemfonts_0.2.2 plyr_1.8.6 igraph_1.2.5

[6] svUnit_1.0.3 splines_4.0.3 crosstalk_1.1.0.1 TH.data_1.0-10 rstantools_2.1.1

[11] inline_0.3.15 digest_0.6.25 htmltools_0.5.0 rsconnect_0.8.16 fansi_0.4.1

[16] magrittr_2.0.1 openxlsx_4.1.5 tzdb_0.1.2 modelr_0.1.8 RcppParallel_5.0.1

[21] matrixStats_0.56.0 vroom_1.5.3 svglite_1.2.3 xts_0.12-0 sandwich_2.5-1

[26] prettyunits_1.1.1 colorspace_1.4-1 blob_1.2.1 rvest_0.3.5 haven_2.3.1

[31] xfun_0.26 callr_3.4.3 crayon_1.3.4 jsonlite_1.7.0 survival_3.2-7

[36] zoo_1.8-8 glue_1.4.2 gtable_0.3.0 emmeans_1.4.7 webshot_0.5.2

[41] V8_3.4.0 pkgbuild_1.0.8 rstan_2.21.2 abind_1.4-5 scales_1.1.1

[46] mvtnorm_1.1-0 DBI_1.1.0 miniUI_0.1.1.1 viridisLite_0.3.0 xtable_1.8-4

[51] bit_1.1-15.2 foreign_0.8-80 stats4_4.0.3 StanHeaders_2.21.0-3 DT_0.13

[56] datawizard_0.2.1 htmlwidgets_1.5.1 httr_1.4.1 threejs_0.3.3 RColorBrewer_1.1-2

[61] arrayhelpers_1.1-0 ellipsis_0.3.1 farver_2.0.3 pkgconfig_2.0.3 loo_2.3.1

[66] dbplyr_1.4.4 labeling_0.3 tidyselect_1.1.0 rlang_0.4.6 reshape2_1.4.4

[71] later_1.0.0 munsell_0.5.0 cellranger_1.1.0 tools_4.0.3 cli_2.0.2

[76] generics_0.0.2 broom_0.5.6 ggridges_0.5.2 evaluate_0.14 fastmap_1.0.1

[81] yaml_2.2.1 bit64_0.9-7 processx_3.4.2 knitr_1.32 fs_1.4.1

[86] zip_2.1.1 nlme_3.1-149 whisker_0.4 mime_0.9 projpred_2.0.2

[91] xml2_1.3.2 rstudioapi_0.11 compiler_4.0.3 shinythemes_1.1.2 curl_4.3

[96] gamm4_0.2-6 reprex_0.3.0 statmod_1.4.34 stringi_1.5.3 highr_0.8

[101] ps_1.3.3 Brobdingnag_1.2-6 gdtools_0.2.2 lattice_0.20-41 nloptr_1.2.2.1

[106] markdown_1.1 shinyjs_1.1 vctrs_0.3.0 pillar_1.4.4 lifecycle_0.2.0

[111] jquerylib_0.1.1 bridgesampling_1.0-0 estimability_1.3 data.table_1.12.8 insight_0.14.5

[116] httpuv_1.5.3.1 R6_2.4.1 promises_1.1.0 rio_0.5.16 codetools_0.2-16

[121] boot_1.3-25 colourpicker_1.0 MASS_7.3-53 gtools_3.8.2 assertthat_0.2.1

[126] rprojroot_1.3-2 withr_2.2.0 shinystan_2.5.0 multcomp_1.4-13 mgcv_1.8-33

[131] parallel_4.0.3 hms_0.5.3 grid_4.0.3 coda_0.19-3 minqa_1.2.4

[136] rmarkdown_2.11 git2r_0.27.1 shiny_1.4.0.2 lubridate_1.7.10 base64enc_0.1-3

[141] dygraphs_1.1.1.6